An AI Visibility Score measures how likely your content is to be understood, trusted, and cited by AI search systems—not how well it ranks in traditional search results. In 2026, rankings describe where you appear. AI visibility describes whether you exist at all in AI-generated answers.

Key points

- AI Visibility Scores evaluate whether AI systems recognize, interpret, and safely reuse your brand—not just whether pages reach high SERP positions.

- Entity recognition, semantic coverage, structural extractability, and citation safety form the core dimensions that govern your presence across AI answers.

- Modern teams layer traditional SEO metrics with AI visibility diagnostics so they can steward crawl health, interpretation clarity, and citation readiness at once.

Introduction: Rankings Describe Placement, AI Visibility Describes Existence

AI search systems no longer hand users a list of ten blue links and invite them to evaluate each option. They produce synthesized, direct responses that blend facts, definitions, frameworks, and recommendations into a single answer. In that answer, the system either cites you or it does not. It either expresses your point of view faithfully or it paraphrases someone else. When people ask for a recommendation, research primer, or diagnostic framework, the answer engine has already filtered every source through its own internal trust ranking before the user ever sees your URL. That change forces a new measurement vocabulary.

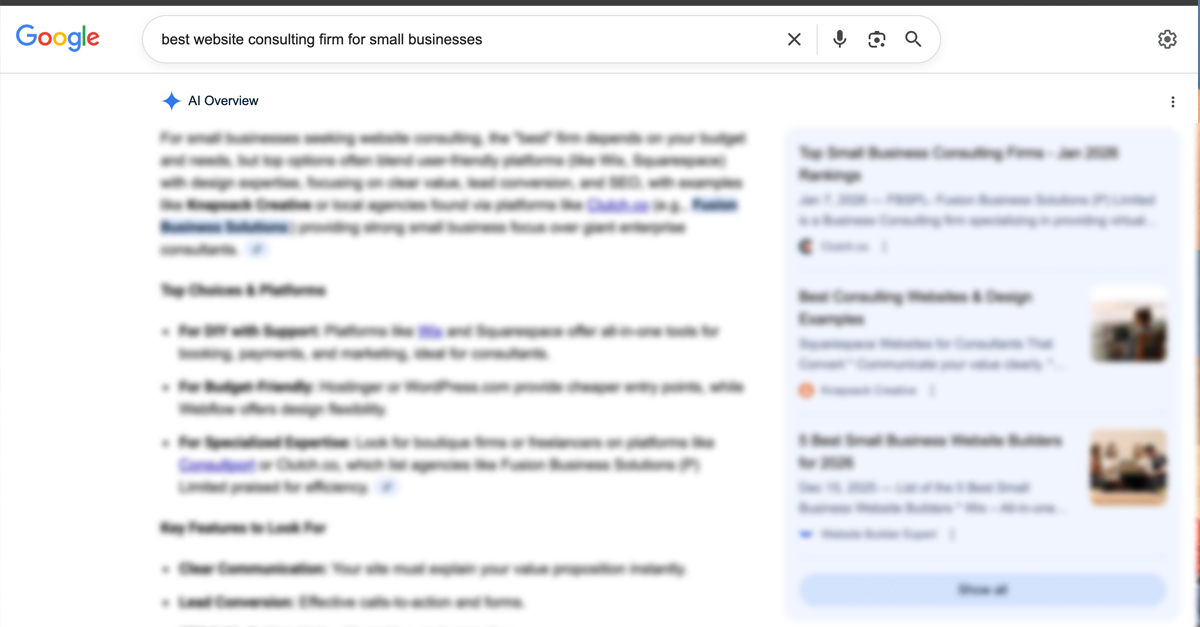

An AI Visibility Score captures how well your pages survive that invisible filtering. Instead of celebrating a #1 ranking, you monitor whether Copilot for Search quotes your data, whether Google’s AI Overviews lists you as a cited authority, and whether Perplexity selects your explainer to ground its bullets. Rankings describe placement. AI visibility describes whether you exist inside the answers people now consume.

The change can feel uncomfortable. Rankings are tangible and comparable. AI visibility is probabilistic and interpretive. Yet the brands that adopted this dual perspective early are already adapting their content strategy, measurement stack, and stakeholder reporting to reflect the realities of 2026. They understand that an AI Visibility Score does not replace SEO; it widens the aperture so that they can see past the SERP into the answer itself.

The Measurement Problem Modern Search Created

The last two decades conditioned teams to equate success with rankings, traffic, and clicks. Search engines produced familiar interfaces, and user journeys fit predictable funnels. You optimized for keywords, built authoritative backlinks, and monitored analytics dashboards for incremental lifts. Those metrics worked because engines returned links, and users clicked them. The entire industry could rally around rank trackers, keyword difficulty, and conversion attribution models built on click streams.

Modern AI search systems broke that model. People now ask conversational questions that contain context, intent, and ambiguity. Systems generate answers in natural language, often blending multiple sources into an integrated explanation. Many of those answers produce zero clicks because the answer lives in the interface itself. Some experiences surface citations, but the UI frequently prioritizes clarity over linking. Other experiences, especially inside closed assistants, do not show sources at all, even though the underlying systems rely on them. The measurement stack designed for clicks cannot capture what just happened.

This gap manifests in weekly reporting. A marketing team sees stable rankings and traffic but notices that buyers now reference AI-generated briefs during sales calls. Support teams hear customers say “Perplexity recommended you for this part of the workflow but suggested a competitor for the rest.” The data warehouse reflects business outcomes, yet the instrumentation between search behavior and site visits is thin. Without a new metric, teams risk misinterpreting high rankings as comprehensive visibility while AI systems gradually shift recommendations elsewhere.

That measurement gap is why AI Visibility Scores exist. They give teams a way to quantify whether the brand, the people, and the content actually appear in the answers users see—even when the analytics trail goes cold. They translate the invisible interpretive layer of AI systems into trackable signals that complement, not replace, the traditional SEO dashboard.

Why AI Visibility Scores Exist

The idea is simple: if AI arbitrates whether your perspective enters the conversation, you need the equivalent of a media monitoring system for AI answers. Instead of counting column inches or press mentions, you track citations, entity recognition, and how consistently your narratives show up across engines. AI Visibility Scores package those observations into a scalable metric that allows benchmarking, prioritization, and experimentation.

Brands that adopt AI Visibility Scores treat them as executive-language proof that investments in structured data, entity work, and content architecture matter. Instead of telling leadership “we improved schema coverage,” they can demonstrate “our AI visibility rose in the topics that align with our product line, and we now appear in more answer experiences than we did last quarter.” The metric bridges the historical gap between technical SEO work and business storytelling by translating interpretive clarity into a number stakeholders can understand.

Most importantly, AI Visibility Scores give teams the confidence to widen their focus. When you know how AI systems describe you, you can decide whether to double down on a narrative, clarify an offering, or rehabilitate a misinterpreted concept. Without the score, teams either assume rankings equal presence or chase disconnected optimizations without feedback. With it, they can map blind spots, allocate resources, and track progress with the same rigor they apply to traffic forecasts.

What an AI Visibility Score Is (Clear Definition)

An AI Visibility Score measures how visible your brand, pages, and entities are inside AI-generated answers—not search results. The score asks whether AI systems recognize your brand, understand what you do, trust your content, and reuse or cite your pages when they assemble answers. It captures a different layer of reality than rankings. Rankings tell you where you sit on a results page. AI visibility tells you whether the systems that now summarize the web include you in the conversation.

To calculate the score, teams blend observable signals: instances where AI assistants quote your copy, appearances in AI overview panels, entity references in generated briefings, and qualitative audits that evaluate how accurately the answer conveys your positioning. Each observation carries weight based on recency, topical alignment, and prominence. Instead of a single data source, the score synthesizes a lattice of signals that collectively describe your footprint in AI-mediated discovery.
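The weighting described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a standard formula: the prominence weights, the 90-day half-life for recency, and the normalization by the number of prompts run are all hypothetical choices a team would calibrate for itself.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical weighting scheme: the factors come from the text above,
# the numbers do not.
PROMINENCE_WEIGHTS = {"quoted": 1.0, "cited": 0.8, "mentioned": 0.4}
HALF_LIFE_DAYS = 90  # assumed: an observation loses half its weight every 90 days


@dataclass
class Observation:
    engine: str               # hypothetical label, e.g. "perplexity"
    observed_on: date
    prominence: str           # a key of PROMINENCE_WEIGHTS
    topical_alignment: float  # 0.0-1.0, fit with a priority topic (scored by the team)


def observation_weight(obs: Observation, today: date) -> float:
    """Combine recency (exponential decay), topical alignment, and prominence."""
    age_days = (today - obs.observed_on).days
    recency = 0.5 ** (age_days / HALF_LIFE_DAYS)
    return recency * obs.topical_alignment * PROMINENCE_WEIGHTS[obs.prominence]


def visibility_score(observations: list[Observation], prompts_run: int, today: date) -> float:
    """Weighted observations per monitored prompt, scaled to 0-100 and capped at 100."""
    if prompts_run <= 0:
        return 0.0
    total = sum(observation_weight(o, today) for o in observations)
    return min(100.0, 100.0 * total / prompts_run)


today = date(2026, 3, 1)
observations = [
    Observation("perplexity", date(2026, 3, 1), "quoted", 1.0),    # fresh, on-topic quote
    Observation("ai_overviews", date(2025, 12, 1), "cited", 0.5),  # 90 days old, partly on-topic
]
print(round(visibility_score(observations, prompts_run=10, today=today), 1))  # 12.0
```

Exponential decay is only one way to express recency; a team could just as easily use fixed reporting windows. The point is that each weight is explicit and documented, so the score stays repeatable.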

Because the score measures interpretive presence, it benefits from the same discipline you already apply to analytics. Teams define the scope (brand-level, product-level, or topic-level), align on which engines to monitor, and document how to handle partial citations or paraphrased references. The result is a consistent, repeatable metric that can show trends over time even as individual answer interfaces evolve.

What an AI Visibility Score Is Not

To avoid confusion, it helps to say what the score does not represent. An AI Visibility Score is not a keyword ranking metric, a traffic metric, a backlink score, a proxy for Domain Authority, or a prediction of clicks. Those belong to traditional SEO. AI visibility measures interpretability and reuse, not competition for SERP positions.

You cannot infer the score from a rank tracker because AI systems do not select sources based on keyword placement. You cannot use it as a traffic proxy because many AI answer experiences produce zero clicks by design. Backlinks still matter as trust signals, but they are insufficient to predict whether an answer engine will quote you. Domain Authority hints at general reputation, yet AI systems validate freshness, clarity, and alignment at the entity level. And while high AI visibility can correlate with traffic in experiences that still drive visits, the score itself does not attempt to forecast sessions.

Understanding what the score is not protects teams from misapplication. It keeps reporting honest and ensures stakeholders recognize AI visibility as an additive layer. When someone asks whether a dip in AI visibility means the SEO team failed, you can explain that the score monitors different levers. That clarity prevents reactive strategy swings and maintains focus on the interpretive improvements that actually influence AI answers.

Why Rankings Became an Incomplete Signal

A page can rank first, load fast, pass every SEO audit, and sport an enviable backlink profile. It can dominate traditional metrics and still never appear in AI overviews, never be cited by ChatGPT, and never show up in Perplexity answers. The disconnect feels counterintuitive until you recognize that AI systems evaluate different criteria. They do not ask “Who ranks highest?” They ask “Which sources can I safely use to answer this question?”

Ranking algorithms signal relevance and authority within the context of keyword-driven search. AI systems evaluate interpretability, confidence, and the risk of misinforming a user. They inspect whether the content is structured in a way they can parse, whether the entity relationships are unambiguous, whether the tone is balanced, and whether claims align with corroborating signals. Rankings alone cannot guarantee those qualities.

This distinction matters because teams were trained to equate top placement with comprehensive visibility. When AI engines shift the battleground to answer generation, that assumption breaks. Monitoring rankings without AI visibility creates blind spots. You may celebrate a keyword win while your buyers consume AI briefings that reference competitors. You may invest in high-volume keywords while answer engines rely on lower-volume but conceptually rich pages. Recognizing the incomplete nature of rankings frees teams to examine the deeper layers that AI systems actually use.

The Core Question AI Visibility Answers

Traditional SEO asks “Where do we rank?” AI visibility asks “Do AI systems acknowledge us as a valid source at all?” If the answer is no, rankings do not matter. That single question reorients strategy. It pushes teams to examine whether their entity definitions align across the site, whether structured data reflects reality, whether content provides the contextual scaffolding AI needs to summarize accurately, and whether the brand’s point of view is documented with enough depth to be reusable.

The question also surfaces opportunity. If AI systems already acknowledge you in some topics but not others, you can study the difference. Maybe certain pages contain richer structured data, or maybe the team published a long-form guide that answers the types of questions assistants receive. Comparing high-visibility and low-visibility topics reveals patterns you can replicate. The score therefore acts as both a diagnostic and a roadmap generator.

What an AI Visibility Score Actually Measures

An AI Visibility Score evaluates four primary dimensions, each invisible to classic SEO tools: entity recognition and resolution, semantic coverage, structural extractability, and trust plus citation safety. Each dimension contains multiple sub-signals that collectively describe how AI systems perceive your content. Some brands bundle them into one composite score. Others track each dimension separately to pinpoint strengths and weaknesses. Either way, the emphasis shifts from keyword placement toward interpretive fidelity.
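For teams that want both views, a minimal sketch of a composite plus a per-dimension readout might look like this. The dimension names come from this section; the 0-100 scale, the equal default weights, and the helper names are assumptions.

```python
from typing import Optional

# The four dimensions named in this section; the 0-100 scores and
# equal default weights are assumptions for illustration.
DIMENSIONS = (
    "entity_recognition",
    "semantic_coverage",
    "structural_extractability",
    "citation_safety",
)


def composite_score(scores: dict[str, float], weights: Optional[dict[str, float]] = None) -> float:
    """Weighted mean of the dimension scores (equal weights by default)."""
    weights = weights or {d: 1.0 for d in DIMENSIONS}
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing dimension scores: {missing}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    return sum(scores[d] * weights[d] for d in DIMENSIONS) / total_weight


def weakest_dimension(scores: dict[str, float]) -> str:
    """The dimension to investigate first when tracking them separately."""
    return min(DIMENSIONS, key=lambda d: scores[d])


scores = {
    "entity_recognition": 70,
    "semantic_coverage": 60,
    "structural_extractability": 40,
    "citation_safety": 80,
}
print(composite_score(scores))    # 62.5
print(weakest_dimension(scores))  # structural_extractability
```

Reporting the weakest dimension next to the composite keeps a single headline number from hiding the lever that most needs work.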

Because these dimensions interact, improvements in one area often elevate another. Sharpening entity definitions helps structural extractability because the system can map references more easily. Expanding semantic coverage increases trust because the content demonstrates comprehensive expertise. Investing in citation safety encourages better schema alignment, which in turn supports entity recognition. Treating the dimensions as a holistic system keeps teams from over-optimizing a single lever at the expense of the others.

Dimension 1: Entity Recognition and Resolution

AI systems operate on entities, not URLs. When a user asks a question, the system decomposes the prompt into entities, relationships, and intents. It cross-references any relevant knowledge graphs, internal embeddings, or retrieved documents. If your brand is recognized as a distinct entity with stable attributes, the system can confidently pull from your material. If your entity is ambiguous, conflated with another company, or inconsistently described, the system hesitates.

An AI Visibility Score evaluates whether your brand is recognized as a distinct entity, whether that entity is consistently described across pages, whether products, services, and roles are unambiguous, and whether entity meaning drifts over time. This directly connects to the problem explored in fixing knowledge graph drift. If entity signals conflict, AI systems reduce confidence—and visibility drops.

Practical work in this dimension includes auditing how your brand appears in schema, bios, case studies, product descriptions, and media coverage. It involves aligning naming conventions, reinforcing canonical spellings, and clarifying relationships between parent brands, sub-brands, and offerings. Teams often create entity definition repositories that document preferred labels, alternative names, and precise descriptions. They ensure that structured data references those definitions and that internal linking reflects the same relationships. Over time, these practices reduce ambiguity and boost AI recognition.
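An entity definition repository can start as simple structured data that a script checks pages against. The sketch below is hypothetical: the brand names, the `EntityDefinition` fields, and the whole-word matching rule are illustrative assumptions, and a real audit would also cover schema, bios, and external profiles.

```python
import re
from dataclasses import dataclass, field


@dataclass
class EntityDefinition:
    canonical: str                                       # preferred label
    alternates: list[str] = field(default_factory=list)  # accepted alternative names
    deprecated: list[str] = field(default_factory=list)  # labels that should no longer appear
    description: str = ""                                # the precise, agreed description


def find_naming_drift(pages: dict[str, str], entities: list[EntityDefinition]) -> list[tuple[str, str, str]]:
    """Return (page, deprecated label, canonical label) for each deprecated label found."""
    findings = []
    for url, text in pages.items():
        for entity in entities:
            for old in entity.deprecated:
                # Whole-word, case-insensitive match so "AcmeStats" does not hit "AcmeStatsPro".
                if re.search(rf"\b{re.escape(old)}\b", text, flags=re.IGNORECASE):
                    findings.append((url, old, entity.canonical))
    return findings


repository = [
    EntityDefinition(
        canonical="Acme Analytics",
        alternates=["Acme"],
        deprecated=["AcmeStats"],
        description="Product analytics platform for B2B SaaS teams.",
    )
]
pages = {
    "/pricing": "AcmeStats plans start with a free tier.",
    "/about": "Acme Analytics helps product teams understand usage.",
}
print(find_naming_drift(pages, repository))
# [('/pricing', 'AcmeStats', 'Acme Analytics')]
```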

It is equally important to monitor external signals. AI systems draw from public data, review sites, and partner pages. If external descriptions conflict with your preferred narrative, your visibility can suffer even if your site is consistent. Therefore, the entity recognition dimension extends beyond your domain. It includes outreach, partnership alignment, and content updates that encourage ecosystem coherence. When everything points to the same definition, AI systems resolve your entity faster and assign higher confidence to your pages.

Dimension 2: Semantic Coverage (Not Keyword Coverage)

Traditional SEO measures keyword presence, density, and per-query ranking. AI visibility evaluates topic completeness, conceptual coverage, relationship clarity between ideas, and whether content answers questions holistically. Assistants care about whether your material can support a conversation, not whether you repeated an exact phrase. The best-performing pages read like comprehensive guides that anticipate adjacent questions, clarify edge cases, and stitch together concepts into frameworks that an AI can reuse.

To score well on semantic coverage, your content needs to map the conceptual terrain around each topic. That includes definitions, context, comparisons, step-by-step breakdowns, troubleshooting advice, and strategy guidance. Instead of scattering this information across multiple thin posts, teams produce deep, evergreen resources that consolidate knowledge. They cross-link supporting assets so that retrieval systems can follow the semantic graph and gather everything required to build an answer.

Semantic coverage also relies on structural cues. Headings, subheadings, summary boxes, and tables help AI systems understand the hierarchy of ideas. Glossaries define terms. Callouts spotlight crucial distinctions. FAQ sections capture the long-tail questions that assistants frequently receive. By designing content for conceptual completeness, you prepare it to function as a modular knowledge source. The resulting AI visibility improvement is a byproduct of that intentional architecture.

Because coverage is never static, teams schedule regular reviews. They compare high-visibility topics against evolving user questions, competitor narratives, and product updates. They capture new questions from sales calls, support tickets, and community threads. They treat content as a living knowledge base that must keep pace with how people talk about the topic. This ongoing stewardship keeps semantic coverage aligned with market reality.

Dimension 3: Structural Extractability

AI systems favor content that can be parsed cleanly, quoted accurately, and summarized without distortion. Structural extractability captures how well your page layout, markup, and narrative design support that goal. If the system can identify the beginning and end of an explanation, pull a definition without ripping it from context, and cite you without misrepresenting your stance, you score higher.

An AI Visibility Score evaluates section clarity, self-contained explanations, logical progression, and explicit definitions or comparisons. This is why pages optimized using principles from answer capsules for LLMs consistently perform better in AI search. Clear heading hierarchy, descriptive anchor links, consistent paragraph length, and strategically placed summary elements all contribute. So do structured data patterns like `HowTo`, `FAQPage`, or `QAPage` when they accurately reflect the content.
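As one example of structured data that mirrors visible content, the helper below builds `FAQPage` markup using the schema.org `Question` and `Answer` types. The function name is hypothetical, and the markup is only appropriate when the same questions and answers appear on the page.

```python
import json


def faq_page_jsonld(qa_pairs: list[tuple[str, str]]) -> dict:
    """Build schema.org FAQPage markup from (question, answer) pairs shown on the page."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


markup = faq_page_jsonld([
    ("What is an AI Visibility Score?",
     "A measure of how visible a brand, its pages, and its entities are inside AI-generated answers."),
])
# Embed the output in a <script type="application/ld+json"> element on the same page.
print(json.dumps(markup, indent=2))
```

Generating the markup from the same source as the visible FAQ section is one way to keep the two from drifting apart.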

Teams working on extractability often collaborate with UX writers and designers. They ensure the visual design supports scanning, that inline components like tabs or sliders do not hide critical information, and that the HTML remains accessible. They annotate sections with IDs so that assistants can deep link to specific insights. They balance rich media with text alternatives so AI systems can interpret the content even if they cannot render the visuals. Each of these decisions turns the page into a reliable knowledge object.

Testing plays a crucial role. Teams run experiments by feeding their pages into retrieval-augmented generation workflows to see how easily the system quotes them. They analyze how often AI assistants truncate sentences or skip steps, then adjust structure accordingly. Over time, this iterative refinement creates content that behaves predictably inside answer engines, driving higher extractability scores.
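A crude version of that check can be scripted once an answer has been generated. This sketch assumes the answer text was already produced by whatever retrieval-augmented workflow the team uses (no model is called here) and treats a step as preserved only if it appears verbatim, so paraphrased steps count as skipped.

```python
def step_coverage(steps: list[str], answer: str) -> tuple[float, list[str]]:
    """Share of page steps that survive verbatim in a generated answer, plus the ones skipped."""
    if not steps:
        return 1.0, []
    answer_lower = answer.lower()
    missing = [step for step in steps if step.lower() not in answer_lower]
    return (len(steps) - len(missing)) / len(steps), missing


# Hypothetical steps from a how-to page and a hypothetical generated answer.
steps = ["export the report", "filter by region", "share the dashboard"]
answer = "First, export the report. Then share the dashboard with your team."
coverage, skipped = step_coverage(steps, answer)
print(f"{coverage:.2f}", skipped)  # 0.67 ['filter by region']
```

Verbatim matching is deliberately strict; a team that wants to tolerate paraphrase would swap in a fuzzier comparison, at the cost of a less predictable test.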

Dimension 4: Trust and Citation Safety

AI systems are conservative. They avoid citing content that feels overly promotional, ambiguous, inconsistent, or unsupported by structure or schema. An AI Visibility Score measures tone neutrality, claim grounding, alignment between content and structured data, and overall “safe-to-cite” signals. If the system senses that quoting you could mislead the user, it will choose a different source—even if that source ranks lower in traditional search.

Trust starts with clarity. Clearly attribute claims, differentiate between opinion and evidence, and link to supporting resources when appropriate. Ensure that structured data claims match on-page statements. Keep copy updated so AI systems do not encounter stale references or discontinued offerings. When you acknowledge limitations, explain assumptions, and provide transparent context, you signal that your content can be reused without the system shouldering reputational risk.

Safety also involves tone. AI assistants prefer balanced language that helps users make informed decisions. Over-promising, using sensational headlines, or burying disclaimers can erode confidence. By contrast, pages that guide readers through nuanced explanations, present multiple perspectives, and clarify next steps feel more reliable. Teams often create editorial guidelines specifically for AI-safe copy: emphasize clarity over hype, cite internal resources carefully, and write with the assumption that your words may be read aloud or summarized.

Finally, alignment matters. Schema governance ensures that structured data, on-page copy, and external profiles tell the same story. When everything matches, AI systems perceive higher integrity and are more willing to attribute answers to you. This is the heart of designing content that feels safe to cite for LLMs. It is not about toning down your voice—it is about building the confidence that whatever the assistant quotes will stand up to scrutiny.
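Part of that governance can be automated. The sketch below, with hypothetical function names and sample content, flags `FAQPage` answers in JSON-LD whose text does not appear in the page's visible copy after whitespace and case normalization. It checks one schema type only and would also flag paraphrases that are still accurate.

```python
import json
import re


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so layout differences do not cause false alarms."""
    return re.sub(r"\s+", " ", text).strip().lower()


def misaligned_faq_answers(jsonld: str, visible_text: str) -> list[str]:
    """Return the questions whose structured-data answer is missing from the visible copy."""
    data = json.loads(jsonld)
    page = normalize(visible_text)
    problems = []
    for item in data.get("mainEntity", []):
        answer = item.get("acceptedAnswer", {}).get("text", "")
        if normalize(answer) not in page:
            problems.append(item.get("name", "<unnamed question>"))
    return problems


jsonld = json.dumps({
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [
        {"@type": "Question", "name": "Is there a free tier?",
         "acceptedAnswer": {"@type": "Answer", "text": "Yes, for up to three users."}},
        {"@type": "Question", "name": "How much is the Pro plan?",
         "acceptedAnswer": {"@type": "Answer", "text": "Pro costs $49 per month."}},
    ],
})
visible_text = """
Is there a free tier?
Yes, for up to   three users.
How much is the Pro plan?
Pro costs $59 per month.
"""
print(misaligned_faq_answers(jsonld, visible_text))  # ['How much is the Pro plan?']
```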

Why Backlinks Alone No Longer Guarantee Visibility

Backlinks still matter, but mostly as background trust signals. In AI search, links do not explain meaning, resolve ambiguity, or prevent misinterpretation. AI visibility depends more on clarity, consistency, structure, and entity alignment. This is why small brands can outperform large ones in AI answers, as explored in the big brand bias in AI search and how small brands can still win.

Link-building campaigns designed for traditional SEO can support AI visibility when they strengthen brand recognition or drive authoritative mentions. However, without the interpretive layer, links cannot guarantee citations. An assistant evaluating two sources may prefer a smaller site with impeccable entity clarity over a legacy domain with generic copy. The signal-to-noise ratio matters more than raw domain metrics.

That does not mean backlinks are obsolete. They still influence crawl discovery, reinforce knowledge graph associations, and validate expertise. The difference is that they now act as part of a broader evidence stack. Teams track backlink campaigns alongside AI visibility improvements to ensure they understand which links contribute to interpretive confidence. They treat backlinks as one signal among many rather than the singular driver of organic success.

How an AI Visibility Score Differs From Rankings (Side-by-Side)

Comparing rankings and AI visibility clarifies their complementary nature:

| | Rankings | AI Visibility Scores |
|---|---|---|
| What they measure | Position in SERPs | Presence in AI answers |
| Scope | Query-specific | Topic-level |
| Nature | Competitive | Interpretive |
| Orientation | Click-oriented | Citation-oriented |
| Unit of analysis | Page-level | Entity-level |

They answer different questions and should never be conflated.

Visualizing the difference helps stakeholders. A side-by-side dashboard might show keyword position trends next to AI citation frequency. When rankings climb but AI visibility stagnates, the team knows to inspect entity clarity or extractability. When AI visibility rises without rank changes, they can attribute broader awareness gains to interpretive improvements. The interplay becomes a diagnostic tool that reveals where to invest next.
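The two diagnostic rules in the paragraph above can be encoded directly. In this hypothetical sketch, both inputs are normalized period-over-period trends where positive means improvement, the 5% threshold is an assumption, and every other combination is left to human review rather than guessed at.

```python
def diagnose(ranking_trend: float, visibility_trend: float, threshold: float = 0.05) -> str:
    """Map ranking and AI visibility trends (e.g. 0.10 = 10% better) to a next step."""
    rankings_climbing = ranking_trend > threshold
    rankings_flat = abs(ranking_trend) <= threshold
    visibility_rising = visibility_trend > threshold
    visibility_stagnant = not visibility_rising  # flat or falling

    if rankings_climbing and visibility_stagnant:
        return "inspect entity clarity and structural extractability"
    if visibility_rising and rankings_flat:
        return "attribute gains to interpretive improvements"
    return "no single diagnosis; review both dashboards"


print(diagnose(ranking_trend=0.12, visibility_trend=0.0))
# inspect entity clarity and structural extractability
```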

Because the metrics examine different layers, success often requires optimizing the same page twice—once for rankings, once for visibility. The same deep guide can satisfy both if it balances crawl accessibility with interpretive clarity. But in many cases, teams augment a high-ranking page with additional schema, restructured sections, or supporting assets crafted specifically for AI retrieval. The goal is not to choose between ranking and visibility; it is to orchestrate both.

Why AI Visibility Matters More Than Rankings in 2026

In AI search, being cited matters more than being clicked, being understood matters more than being indexed, and being trusted matters more than being optimized. If your brand is invisible to AI systems, you effectively do not exist in a growing share of search experiences. Users who rely on conversational assistants will never hear your name. Buyers who gather requirements through AI briefings will never see your distinctions. Analysts researching market landscapes will map categories without you.

This is not hypothetical. Teams already observe that buyers arrive with AI-generated notes summarizing competitors. Journalists request clarifications because an assistant misattributed a quote. Support teams receive tickets referencing AI-suggested instructions. Without visibility, you forfeit influence over those conversations. Rankings remain valuable for the portions of search that still behave traditionally, but visibility decides whether you participate in the new interface layer.

Prioritizing AI visibility also unlocks resilience. Algorithms change, interfaces evolve, and new engines emerge. The brands that focus on clarity, structured knowledge, and trustworthy storytelling adapt faster because those qualities travel well across platforms. Whether the next interface is a wearable assistant, an enterprise concierge, or an in-car infotainment system, the underlying requirement stays the same: AI must feel confident citing you. Investing in that confidence today protects your presence tomorrow.

The Measurement Shift: From Traffic to Presence

This is the same shift described in AI visibility vs traditional rankings: new KPIs for modern search. Modern teams track citation frequency, entity coverage, topic authority, and inclusion in AI summaries. Traffic remains important, but it is no longer the sole proxy for impact.

Presence-based metrics reframe executive storytelling. Instead of reporting “sessions fell five percent,” teams contextualize the change: “AI visibility in our core topics rose, but the assistant delivered full answers, so fewer users clicked through. We are still referenced, and the answer aligns with our narrative.” That nuance helps leadership understand the evolving landscape and avoids misinterpreting traffic dips as failures.

Presence also introduces new accountability loops. Product marketing wants to know whether the brand’s differentiators appear in AI briefings. Customer success wants to confirm that onboarding instructions are accurately summarized. Legal wants assurance that disclaimers travel with the advice. By measuring presence, teams can validate each of these concerns and plan remediation when the answer falls short.

How AI Visibility Scores Are Used in Practice

High-performing teams use AI Visibility Scores to identify which topics AI systems associate with their brand, detect gaps between ranking pages and cited pages, diagnose why AI answers misattribute expertise, and track improvement after structural or schema changes. This pairs naturally with diagnostics from the AI SEO Checker and validation via the Schema Generator.

Operationally, teams schedule recurring scans across major engines. They maintain libraries of prompts that mirror user questions, buying scenarios, troubleshooting tasks, and executive research queries. They monitor whether the assistant cites their resources, how it describes the brand, and whether the answer remains faithful to the product’s capabilities. They record qualitative observations alongside quantitative scores so that context travels with the numbers.
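Scan output is easier to trend when every run is stored in the same shape. This sketch assumes results have already been collected (it calls no engine API); the `ScanResult` fields, engine labels, and the `example.com` domain are placeholders, and the metric shown is only citation frequency per topic.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class ScanResult:
    prompt: str              # entry from the team's prompt library
    topic: str               # priority topic the prompt belongs to
    engine: str              # hypothetical engine label
    cited_urls: list[str] = field(default_factory=list)
    notes: str = ""          # qualitative observation that travels with the number


def is_own_url(url: str, domain: str) -> bool:
    """True for the domain itself and any of its subdomains."""
    host = urlparse(url).netloc.lower()
    return host == domain or host.endswith("." + domain)


def citation_rate_by_topic(results: list[ScanResult], domain: str) -> dict[str, float]:
    """Fraction of scanned prompts per topic whose answer cites at least one of our URLs."""
    runs: dict[str, int] = defaultdict(int)
    cited: dict[str, int] = defaultdict(int)
    for result in results:
        runs[result.topic] += 1
        if any(is_own_url(url, domain) for url in result.cited_urls):
            cited[result.topic] += 1
    return {topic: cited[topic] / runs[topic] for topic in runs}


results = [
    ScanResult("best pricing model for usage analytics", "pricing", "engine_a",
               ["https://www.example.com/pricing"]),
    ScanResult("compare analytics pricing tiers", "pricing", "engine_b",
               ["https://competitor.test/pricing"], notes="competitor framed as cheaper"),
    ScanResult("how to onboard a new analytics workspace", "onboarding", "engine_a",
               ["https://docs.example.com/start"]),
]
print(citation_rate_by_topic(results, "example.com"))
# {'pricing': 0.5, 'onboarding': 1.0}
```

Rerunning the same prompt library after a change and comparing these per-topic rates is the simplest form of the feedback loop described next.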

When a scan reveals low visibility in a priority topic, the team investigates each dimension. They inspect entity definitions for conflicts, review semantic coverage for gaps, test extractability by running retrieval experiments, and evaluate whether the tone and evidence feel citation-safe. They document hypotheses, implement improvements, and rerun the scan. Over time, these iterative cycles create a feedback loop similar to conversion optimization—except the goal is to optimize trust, clarity, and reuse.

Common Misinterpretation: “Low AI Visibility Means Bad SEO”

Low AI visibility usually means inconsistent entity definitions, fragmented content, weak structural clarity, or schema misalignment. These issues often exist despite strong SEO performance. That is why the score exists. It surfaces interpretive weaknesses that rankings overlook. Telling a team with healthy rankings that visibility dropped is not an accusation—it is an invitation to examine a different layer.

Explaining this distinction to stakeholders is essential. You can maintain technical excellence, win competitive keywords, and still lack presence in AI answers because the system does not understand how your offerings relate. Conversely, you can have modest rankings but appear in numerous AI briefings because your knowledge base is coherent, structured, and trustworthy. Teaching stakeholders to separate these outcomes avoids misplaced blame and encourages investment in the interpretive layer.

Use analogies to make the point. Traditional SEO is like ensuring your book is shelved in the right aisle of a library. AI visibility is like ensuring librarians recommend your book when patrons ask for advice. You can be perfectly cataloged yet never recommended if the summary on your dust jacket is unclear or if reviewers misinterpret your genre. Both cataloging and recommendation matter. Low AI visibility signals a recommendation problem, not a shelving error.

Example Pattern Seen Repeatedly

A company ranks well for dozens of keywords. AI visibility analysis reveals that AI systems associate the brand with only one narrow topic. Broader offerings are invisible, and competitors are cited for adjacent questions. This is not an SEO failure. It is an interpretation failure.

When teams encounter this pattern, they often discover that their content strategy mirrored old keyword maps. Each page targeted a single phrase with minimal context. Supporting assets were siloed by campaign rather than integrated into a knowledge graph. As a result, AI systems perceived the brand as a niche expert rather than a multi-solution provider. The remedy involves consolidating thin assets into comprehensive guides, aligning structured data across products, and documenting cross-page relationships so retrieval systems connect the dots.

Another common variant occurs when a brand’s thought leadership introduces new terminology without linking it to established concepts. AI systems struggle to map the novel term, so they ignore it. The brand appears invisible in conversations about the broader category even though it leads innovation. Mapping new terms to known entities, providing comparative sections, and offering definitional clarity allow the assistant to understand and reuse the narrative. Visibility rises when the system no longer perceives your language as an outlier.

How AI Visibility Scores Complement Traditional SEO

The healthiest stacks combine traditional SEO audits for crawl, index, and rankings; AI SEO diagnostics for entity and content clarity; and AI Visibility Scores for measurement and tracking. This layered approach is outlined in the modern AI SEO toolkit: 3 tools every website needs for 2026. Each layer informs the others.

The crawl layer ensures engines can access, render, and index your pages. The AI SEO diagnostics layer ensures those pages communicate meaning unambiguously. The visibility layer confirms whether AI systems actually reuse the content. When these disciplines collaborate, the site becomes stable, interpretable, and influential. When one is missing, the system weakens. For example, strong diagnostics without crawl health can still limit visibility because assistants cannot retrieve the page. Strong crawl health without diagnostics can produce ambiguous content that assistants ignore. Visibility metrics tie the system together by showing whether the combined effort delivers citations.

Why AI Visibility Is Harder to Fake

Rankings can be manipulated, temporarily boosted, or influenced by authority alone. AI visibility requires internal consistency, long-term clarity, structured knowledge, and cross-page alignment. It rewards maturity, not shortcuts. You cannot cheat your way into being cited if the assistant cannot understand you. Attempting to game the system with shallow content or exaggerated claims backfires because it erodes the trust dimension.

This difficulty is an advantage. It protects the ecosystem from the most aggressive forms of manipulation and encourages sustainable practices. Teams that commit to entity stewardship, content governance, and structured data excellence enjoy compounding benefits. Their knowledge base becomes a durable asset that continues to attract citations even as interfaces evolve. Those who chase quick wins see temporary bumps, but the assistant will eventually prefer sources with stable interpretive signals.

Strategic Implications for Content Teams

AI Visibility Scores change how teams plan content, measure success, prioritize updates, and justify investment. They encourage long-form assets that function as canonical references. They reward cross-functional collaboration because schema, design, and editorial choices intersect. They push teams to document their brand story in explicit terms rather than relying on implicit understanding.

Content roadmaps shift from keyword lists to topical knowledge architectures. Editorial teams map expert interviews into structured narratives. Designers collaborate on modular components that support extractability. Analysts develop prompt libraries to monitor AI answers. Stakeholders request visibility updates alongside traffic reports. The entire operation becomes more knowledge-centric and less campaign-driven.

This shift also affects hiring and vendor relationships. Teams look for writers who understand structured data, strategists who can translate qualitative insights into schema updates, and analysts who can operate AI monitoring tools. Agencies that deliver AI-ready content align with in-house governance practices. Procurement conversations include questions about entity stewardship. The organization evolves to support visibility as a core competency.

Operating Model: Building the AI Visibility Practice

Establishing an AI visibility practice involves intentional roles, rituals, and documentation. Many teams appoint a visibility lead who coordinates audits, monitors scores, and maintains the roadmap. They create cross-functional councils that include SEO, content, product marketing, analytics, and engineering. These teams meet on a cadence—often monthly—to review visibility trends, share qualitative findings, and prioritize experiments.

The operating model typically includes three loops. The discovery loop monitors AI answers, records citations, and captures deviations. The insight loop analyzes why visibility shifted by mapping changes back to entity work, content updates, or structural enhancements. The delivery loop implements improvements, ranging from schema edits to narrative rewrites. Each loop feeds the others. Discovery alerts the team to issues, insight explains them, and delivery resolves them.

Documentation underpins everything. Teams maintain playbooks that define their scoring methodology, prompt libraries, review checklists, and governance policies. They record every change in a change log: what was updated, why it matters, which dimension it targets, and when the next review is scheduled. This operational rigor ensures continuity even when team members change. It also gives stakeholders confidence that AI visibility is not a side project but a disciplined practice.
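To make the change log concrete, here is a minimal Python sketch of one entry carrying the four fields described above (what changed, why it matters, which dimension it targets, and when the next review falls). The field names, the dimension labels (borrowed from the four core dimensions this article uses), and the 30-day default review window are assumptions for illustration, not a standard schema.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical dimension labels, mirroring the four core dimensions in this article.
DIMENSIONS = {
    "entity_recognition",
    "semantic_coverage",
    "structural_extractability",
    "citation_safety",
}


@dataclass(frozen=True)
class ChangeLogEntry:
    """One change-log row: what changed, why, which dimension, and when to review it."""

    url: str
    what_changed: str
    why_it_matters: str
    dimension: str
    changed_on: date
    review_after_days: int = 30  # assumed default; set your own cadence

    def __post_init__(self) -> None:
        # Reject dimensions the team has not defined, so reports stay comparable.
        if self.dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {self.dimension}")

    @property
    def next_review(self) -> date:
        return self.changed_on + timedelta(days=self.review_after_days)


def due_for_review(log: list[ChangeLogEntry], today: date) -> list[ChangeLogEntry]:
    """Return entries whose scheduled review date has arrived, earliest first."""
    due = [entry for entry in log if entry.next_review <= today]
    return sorted(due, key=lambda entry: entry.next_review)
```

For example, an entry recorded on 10 January 2026 with the default window comes due on 9 February 2026. Validating the dimension at write time is a design choice: it keeps later reporting from splintering into ad-hoc labels.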

Diagnostics, Evidence, and Instrumentation

Diagnostics translate the qualitative behavior of AI systems into actionable data. Teams use answer scraping tools, manual spot checks, and structured testing flows to capture how assistants cite them. They categorize results by engine, topic, intent, and answer type. They annotate each observation with context—whether the assistant paraphrased accurately, whether it mixed in competitor messaging, whether disclaimers remained intact.
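One way to picture this categorization is as a flat record per captured answer, which can then be grouped by any category. The sketch below assumes hypothetical field names and shows a single derived metric, the share of answers that cited you per engine or topic; it is an illustration, not the output format of any particular answer-scraping tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    """One captured AI answer for one test prompt (illustrative fields)."""

    engine: str       # e.g. "Perplexity" or "Copilot"
    topic: str
    intent: str       # e.g. "informational", "comparison"
    answer_type: str  # e.g. "list", "paragraph", "steps"
    cited: bool       # did the answer cite our content?
    paraphrase_accurate: bool = True
    competitor_mixed_in: bool = False
    disclaimer_intact: bool = True


def citation_rate_by(observations: list[Observation], attribute: str) -> dict[str, float]:
    """Share of observations that cited us, grouped by any categorical field."""
    totals: dict[str, int] = defaultdict(int)
    cited: dict[str, int] = defaultdict(int)
    for obs in observations:
        group = getattr(obs, attribute)
        totals[group] += 1
        cited[group] += int(obs.cited)
    return {group: cited[group] / totals[group] for group in totals}
```

Calling `citation_rate_by(observations, "engine")` or `citation_rate_by(observations, "topic")` gives two views of the same evidence; the annotation flags (paraphrase accuracy, competitor mixing, disclaimers) can be summarized the same way.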

Instrumentation often includes internal dashboards that merge visibility data with traditional metrics. A page might show traffic trends, conversion performance, entity clarity scores, schema coverage, and AI visibility in one view. Analysts can then spot correlations. They notice that visibility jumps when the team publishes a new explainer or that it dips when schema drifts out of sync. These insights inform prioritization and help justify resource allocation.
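As a rough illustration of "spotting correlations," the sketch below computes a Pearson correlation between two dashboard metrics over the periods both contain (for example, weekly schema coverage and a weekly visibility score). The metric names and weekly keys are assumptions. A high coefficient only shows that two series move together; it does not by itself show that a schema change caused a visibility shift.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (spread_x * spread_y)


def metric_correlation(a: dict[str, float], b: dict[str, float]) -> float:
    """Correlate two dashboard metrics over the periods (e.g. ISO weeks) both contain."""
    shared = sorted(set(a) & set(b))
    return pearson([a[p] for p in shared], [b[p] for p in shared])
```

Aligning on shared periods first matters in practice, because visibility scans and analytics exports rarely cover exactly the same weeks.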

Evidence gathering extends beyond the site. Customer interviews, sales feedback, and support logs reveal how AI-generated narratives influence perception. Competitive intelligence tracks which rivals appear in AI answers and what claims they make. Teams feed this qualitative evidence into their visibility practice so that improvements align with real-world conversations.

Structured Data Governance as the Bridge

Structured data sits at the intersection of traditional SEO and AI visibility. It informs crawling, indexing, and snippet generation while also feeding the knowledge graphs and retrieval systems that power AI answers. Governance ensures that schema remains accurate, consistent, and aligned with on-page messaging.

Teams often treat schema as infrastructure. They version it, review it, audit it, and link every claim to a canonical source. They maintain schema component libraries so that new pages inherit consistent patterns. They validate schema against both search guidelines and internal reality. When product details change, the schema update is part of the release checklist. When the brand launches a new service, the entity definitions and schema types update in tandem.
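Validating schema against "internal reality" can be partly automated. The sketch below compares a page's JSON-LD against a canonical record (assumed to come from a product database or entity registry) and reports every property that disagrees. The property names (`name`, `description`, `provider`, `areaServed`) are real schema.org terms, but the example values are hypothetical, and the lookup deliberately ignores arrays and `@graph` structures to stay short.

```python
import json
from typing import Any


def lookup(node: Any, dotted_path: str) -> Any:
    """Follow a dotted path such as "provider.name" through nested JSON-LD objects."""
    for key in dotted_path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def find_schema_drift(jsonld_text: str, canonical: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {path: (markup_value, canonical_value)} for every property that disagrees.

    Properties missing from the markup show up with a markup value of None.
    """
    markup = json.loads(jsonld_text)
    drift = {}
    for path, expected in canonical.items():
        actual = lookup(markup, path)
        if actual != expected:
            drift[path] = (actual, expected)
    return drift
```

Run as part of a release checklist, an empty result means the markup still matches the source of truth; anything else lists exactly which claims need updating.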

This governance fuels visibility. When AI systems encounter precise, well-maintained schema, they can map entities faster, understand relationships, and trust that claims reflect current offerings. Combined with rich narrative content, this creates a flywheel where visibility improvements reinforce the value of governance, encouraging further investment.

Cross-Functional Collaboration and Ownership

AI visibility crosses departmental boundaries. Marketing leads the narrative, SEO guides technical implementation, product marketing owns positioning, analytics monitors measurement, and engineering supports tooling. Without collaboration, visibility efforts stall. With collaboration, they thrive.

Successful teams define clear ownership. One group maintains the entity registry. Another manages prompt testing. Editorial ensures tone and clarity. UX safeguards accessibility and extractability. Legal reviews sensitive claims. Executive sponsors advocate for resources and ensure visibility informs strategic planning. This shared ownership mirrors the shared stakes: visibility affects brand perception, pipeline acceleration, and customer trust all at once.

Communication routines keep everyone aligned. Weekly standups review progress. Monthly readouts summarize visibility trends for leadership. Shared workspaces document experiments, results, and next steps. When visibility shifts, the team can respond quickly because communication infrastructure already exists.

Visibility Lifecycle and Refresh Strategy

Visibility work follows a lifecycle. New content launches with high visibility potential because it addresses emerging questions. Over time, narratives drift, products evolve, and user expectations change. Without refreshes, visibility decays. Teams therefore schedule refresh cadences based on topic criticality. Core offerings may receive quarterly reviews. Evergreen explainers might be audited twice a year. Niche topics follow a slower cadence but remain on the radar.
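A criticality-based cadence is straightforward to turn into a review queue. In this minimal sketch the quarterly and twice-yearly intervals follow the text above, while the yearly interval for niche topics is an assumption standing in for "a slower cadence"; the tier labels and page paths are illustrative.

```python
from datetime import date, timedelta

# Assumed intervals: quarterly for core offerings, twice a year for evergreen
# explainers, and a hypothetical yearly check for niche topics.
REFRESH_INTERVAL_DAYS = {"core": 91, "evergreen": 182, "niche": 365}


def next_refresh(last_reviewed: date, criticality: str) -> date:
    return last_reviewed + timedelta(days=REFRESH_INTERVAL_DAYS[criticality])


def refresh_queue(pages: dict[str, tuple[date, str]], today: date) -> list[str]:
    """Return the URLs whose refresh is due, most overdue first."""
    due = []
    for url, (last_reviewed, criticality) in pages.items():
        when = next_refresh(last_reviewed, criticality)
        if when <= today:
            due.append((when, url))
    return [url for _, url in sorted(due)]
```

For example, a core page last reviewed on 1 January 2026 comes due on 2 April 2026. Sorting by due date rather than by tier means a long-neglected evergreen explainer can outrank a recently reviewed core page.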

Refresh work includes updating facts, expanding coverage, reorganizing sections, revisiting schema, and revalidating entity references. Teams document what changed and why, then rerun visibility scans. By treating content as a living artifact, they sustain presence in AI answers even as the landscape evolves.

Narrative Design for AI Answers

Designing narratives for AI answers means writing with reuse in mind. Longer-form content can still carry personality, but it must also provide modular clarity. Writers outline sections around questions the assistant might receive. They embed definitions near first mention rather than assuming prior knowledge. They highlight decision criteria, compare options, and address counterarguments so that the assistant has material to draw from when advising users.

Storytelling remains crucial. AI systems cannot cite what does not exist, so teams articulate their perspective in detail. They explain why their approach works, how it differs from alternatives, and what philosophy guides their recommendations. They back claims with transparent reasoning. This depth gives the assistant confidence to reuse their narrative. It also differentiates the brand because the AI cannot invent your point of view—it can only surface what you documented.

Continuous Listening and Market Intelligence

Maintaining visibility requires a listening practice that goes beyond search engine updates. Teams monitor community discussions, industry forums, analyst briefings, and customer advisory councils to understand how the conversation evolves. They catalog new questions, emerging terminology, and shifting concerns that buyers raise. This intelligence feeds the content roadmap, ensuring that future updates align with lived conversations instead of assumptions.

Listening also includes observing how AI assistants adapt over time. Interfaces experiment with new layouts, citation conventions, and summarization styles. Teams capture interface changes, note how their content renders within those changes, and adjust structure accordingly. For instance, when an assistant begins highlighting step-by-step instructions, visibility leads verify that their instructions include numbered lists, clear prerequisites, and concise outcomes. When engines introduce industry filters, teams ensure their schema provides the signals needed to qualify for those filters.

To synthesize these inputs, teams maintain market intelligence briefs that summarize weekly or monthly findings. Each brief highlights conversation trends, assistant behavior updates, potential risks, and follow-up actions. Stakeholders use these briefs to align messaging, prioritize research, and validate that visibility work mirrors real-world needs. The result is a responsive practice that treats market listening as a core competency, not an afterthought.

Playbooks for Different Team Sizes

Visibility practices scale differently depending on team size. Large enterprises often build dedicated squads with specialized roles for entity stewardship, schema engineering, and AI monitoring. They implement governance platforms, integrate visibility data into business intelligence systems, and run formal change management. Smaller teams rely on lightweight rituals: a shared spreadsheet of prompts, a monthly schema review session, and documented guidelines that help contractors produce AI-ready content. Both approaches can succeed when tailored to resources.

For lean teams, the key is prioritization. They focus on the highest-impact topics, automate wherever possible, and sequence improvements in sprints. They may adopt templates for entity descriptions, leverage existing analytics tools to track visibility indicators, and partner with subject-matter experts to co-create comprehensive resources. They document processes meticulously so that institutional knowledge persists even when team members multitask across roles.

Mid-sized teams often blend both approaches. They establish centers of excellence for schema governance and content operations while empowering satellite teams to implement recommendations. They run training workshops, maintain knowledge bases, and provide office hours for troubleshooting. Regardless of size, the guiding principle remains: clarity, consistency, and collaboration. Playbooks simply encode those principles into actions that match the team’s capacity.

Future-Proofing Your AI Visibility Strategy

Future-proofing requires acknowledging that AI interfaces will continue to evolve. Emerging modalities—voice assistants, multimodal search, augmented reality overlays—will ask different questions of your content. Preparing for that future means designing assets that travel well across contexts. Provide descriptive alt text for imagery, craft transcripts for multimedia, and ensure structured data reflects sensory experiences where relevant. The more adaptable your content, the easier it becomes for new interfaces to understand and cite you.

Future-proofing also involves ethical considerations. AI systems increasingly evaluate whether sources honor accessibility, privacy, and responsible communication. Teams that embed ethical guidelines into their visibility practice reduce the risk of being filtered out by evolving trust requirements. That includes honoring consent in case studies, clarifying data usage, and aligning claims with verifiable evidence. Responsible storytelling becomes a visibility enabler.

Finally, prepare for organizational change. Document roles, maintain training programs, and invest in tooling that democratizes visibility data. Encourage experimentation so teams can adapt when engines release new features. By cultivating a culture of curiosity and resilience, you ensure that AI visibility remains a strategic asset even as the landscape shifts.

Designing the AI Visibility Dashboard

An effective dashboard makes visibility tangible. It highlights composite scores, breaks them into dimensions, showcases trends, and links to qualitative insights. Many teams include a section for “Wins” that lists new citations or improved answer fidelity. Another section tracks “Watchlist Topics” that require attention. Interactive filters let stakeholders explore visibility by product line, persona, or funnel stage.
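Behind the composite score, one simple approach is a weighted average of per-dimension scores. The sketch below assumes each of the four dimensions named in this article is already scored on a 0 to 100 scale and uses equal weights unless told otherwise; both the scale and the weighting are assumptions each team should document in its own scoring methodology.

```python
from typing import Optional

DIMENSIONS = (
    "entity_recognition",
    "semantic_coverage",
    "structural_extractability",
    "citation_safety",
)


def composite_score(scores: dict[str, float], weights: Optional[dict[str, float]] = None) -> float:
    """Weighted average of 0-100 dimension scores, rounded to one decimal place."""
    weights = weights or {dim: 1.0 for dim in DIMENSIONS}  # equal weights by default
    missing = [dim for dim in DIMENSIONS if dim not in scores]
    if missing:
        raise ValueError(f"missing dimension scores: {missing}")
    total_weight = sum(weights.get(dim, 0.0) for dim in DIMENSIONS)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted = sum(scores[dim] * weights.get(dim, 0.0) for dim in DIMENSIONS)
    return round(weighted / total_weight, 1)
```

With scores of 80, 70, 60, and 90 the equal-weight composite is 75.0; doubling the weight on entity recognition lifts it to 76.0. Keeping the per-dimension inputs visible next to the composite is what lets the dashboard "break it into dimensions."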

The dashboard also integrates with existing analytics. Stakeholders can see how visibility shifts correlate with pipeline, retention, or brand sentiment. When the assistant starts recommending a new product bundle, the dashboard captures the change and alerts revenue teams. When visibility dips in a strategic narrative, leadership can spot the issue before it affects market perception. The dashboard becomes the hub for AI-era search intelligence.

AI Visibility Score FAQ

How often should we update our AI Visibility Score?

Most teams update monthly so they can detect changes without amplifying noise. Critical launches or major algorithm shifts may trigger ad-hoc scans. The cadence should balance responsiveness with sustainability. Too frequent, and the signal becomes noisy. Too infrequent, and issues linger unnoticed.

Which engines belong in the monitoring set?

Include engines your audience actually uses. For many brands that includes Google AI Overviews, Microsoft Copilot, Perplexity, and specialized assistants in your industry. If you sell into enterprises, monitor vendor selection assistants. If you serve consumers, track the AI experiences embedded in search, messaging, and shopping platforms.

What counts as a citation?

Any explicit attribution, hyperlink, or direct mention that the assistant uses to ground its answer. Some teams also count paraphrased references when the assistant reuses distinctive phrasing or frameworks that clearly originate from your content. Document your definition so scoring remains consistent.
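A documented definition can be encoded directly so every scan applies it the same way. This sketch is a simplification under stated assumptions: it treats a source link to your domain as a hyperlink citation, folds explicit attribution and direct mention into a single brand-name check, and counts paraphrases only when the team opts in and the answer contains one of its listed signature phrases. The brand name, domain, and phrase are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse


@dataclass(frozen=True)
class CitationPolicy:
    """A team's documented citation definition (all values are illustrative)."""

    brand_names: tuple[str, ...]
    domain: str
    signature_phrases: tuple[str, ...] = ()
    count_paraphrases: bool = False


def classify_citation(answer: str, source_urls: list[str], policy: CitationPolicy) -> Optional[str]:
    """Return "hyperlink", "mention", "paraphrase", or None if nothing counts."""
    for url in source_urls:
        host = urlparse(url).hostname or ""
        # Accept the bare domain and any subdomain, but not look-alike hosts.
        if host == policy.domain or host.endswith("." + policy.domain):
            return "hyperlink"
    for name in policy.brand_names:
        # Word boundaries keep "Acme" from matching inside "Acmeville".
        if re.search(rf"\b{re.escape(name)}\b", answer, re.IGNORECASE):
            return "mention"
    if policy.count_paraphrases:
        lowered = answer.lower()
        if any(phrase.lower() in lowered for phrase in policy.signature_phrases):
            return "paraphrase"
    return None
```

Exact phrase matching will miss looser paraphrases, so teams that count them usually pair a check like this with manual review.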

Action Plan: Turning Insight into a 2026 Roadmap

Building AI visibility momentum requires a deliberate roadmap. Start by establishing a baseline: audit current citations, evaluate each dimension, and inventory entity definitions. Next, prioritize topics that align with strategic goals. For each topic, define the target narrative, map existing assets, and identify gaps. Assign owners, set timelines, and integrate visibility checkpoints into your content production workflow.

As improvements roll out, document experiments. Record which changes moved the needle, which did not, and which require more investigation. Share wins with stakeholders to reinforce the value of the practice. Align visibility objectives with broader marketing and product milestones so that the work supports launches, campaigns, and customer enablement. The more visibility integrates with core initiatives, the more sustainable it becomes.

Final Takeaway

An AI Visibility Score does not measure how well you rank. It measures whether AI systems know who you are, understand what you do, trust your content, and reuse your answers. In an answer-driven search world, that matters more than rankings. By investing in entity clarity, semantic depth, structural extractability, and citation safety, you ensure that AI assistants describe your brand accurately and consistently—even when users never click through to your site.